Navigation

This article applies to all 7.x versions of Citrix Provisioning, including 2411, 2402 LTSR, and 2203 LTSR.

- Change Log

- Launch the Console

- Farm Properties

- Server Properties

- vDisk Stores

- Device Collections

- Prevent “No vDisk Found” PXE Message

Change Log

- 2024 Aug 6 – Encryption tab in Farm Properties

- 2023 Dec 23 – read-only administrators in 2311

- 2023 Aug 22 – Citrix Blog Post From Legacy to Leading Edge: The New Citrix Provisioning Guidelines says Avoid Modifying the Threads Per Port and Streaming Ports.

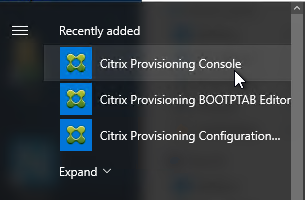

Launch the Provisioning Console

- Launch the Citrix Provisioning Console.

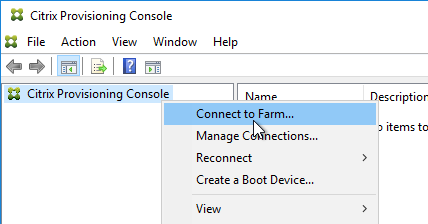

- Right-click the top-left node and click Connect to Farm.

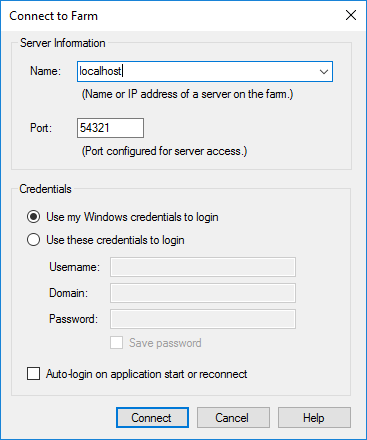

- Enter localhost and click Connect.

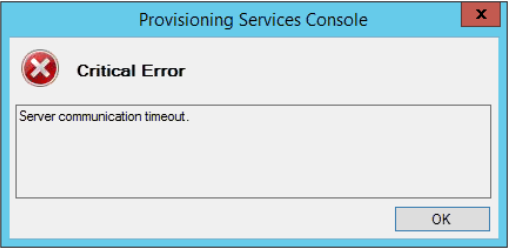

- In large multi-domain environments, or when older domains are still configured but are unreachable, you might see a Server communication timeout error. If so, see CTX231194 PVS Console Errors: “Critical Error: Server communication timeout” for a registry key to skip forest-level trusts, a registry key to increase the console timeout, and a .json file to blacklist domains.

Farm Properties

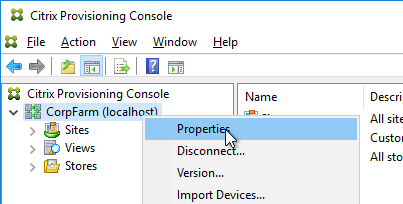

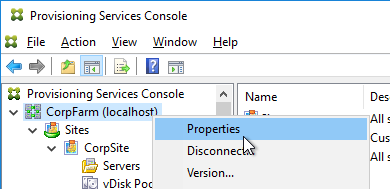

- Right-click the farm name and click Properties.

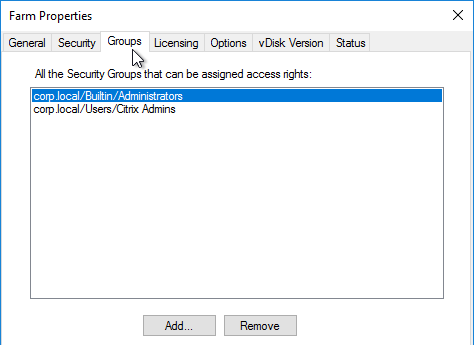

- On the Groups tab, add the Citrix Admins group.

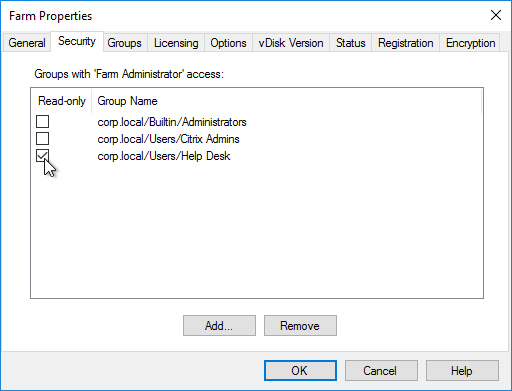

- On the Security tab, add the Citrix Administrators group to grant it full permission to the entire Provisioning farm. You can also assign permissions in various nodes in the Provisioning console. Citrix Provisioning 2311 and newer let you restrict a group to Read-only access.

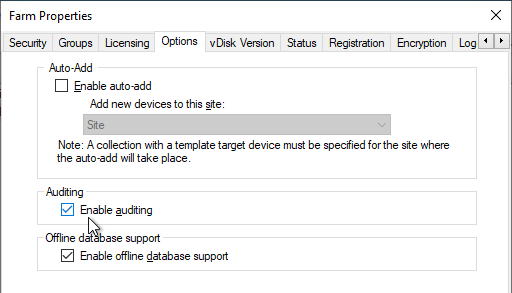

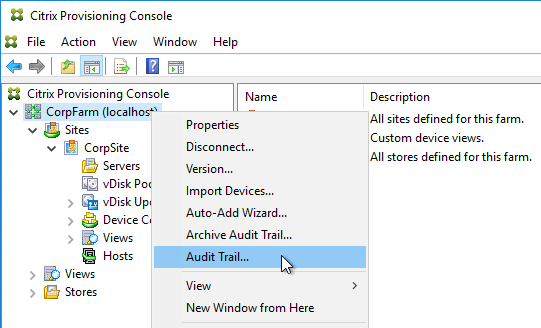

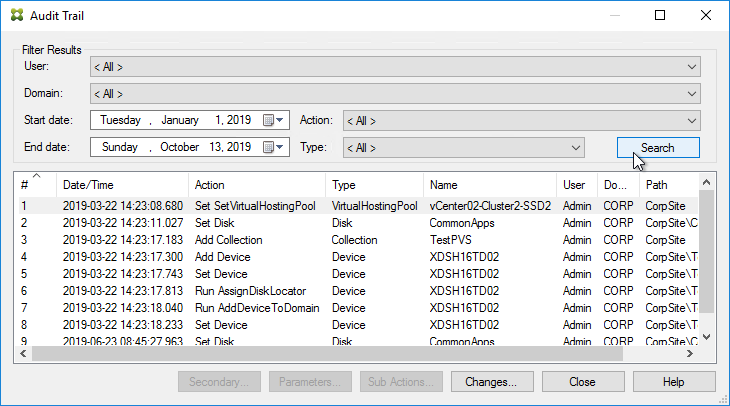

- On the Options tab, check the boxes next to Enable Auditing and Enable offline database support.

- With Auditing enabled, you can right-click on objects and click Audit Trail to view the configuration changes.

- If you see a Problem Report tab, you can enter MyCitrix credentials. This tab was removed in Provisioning 2209.

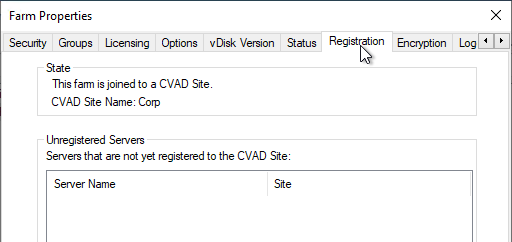

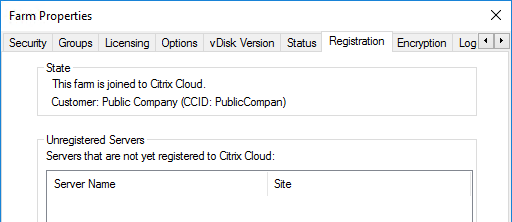

- Registration tab shows you if the farm is registered to a CVAD Site or Citrix Cloud.

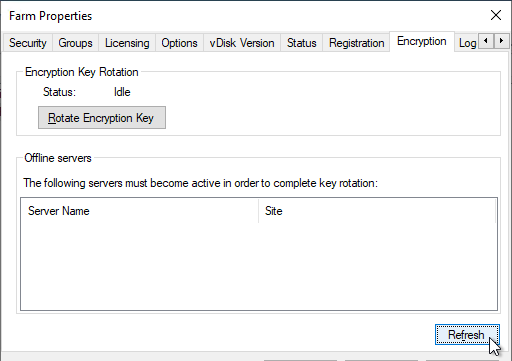

- Encryption tab shows you the status of database encryption. In PVS 2407 and newer, database encryption no longer requires registration with Citrix Cloud.

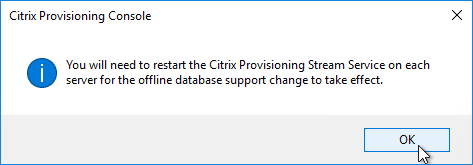

- Click OK to close Farm Properties.

- Click OK when prompted that a restart of the service is required.

Server Properties

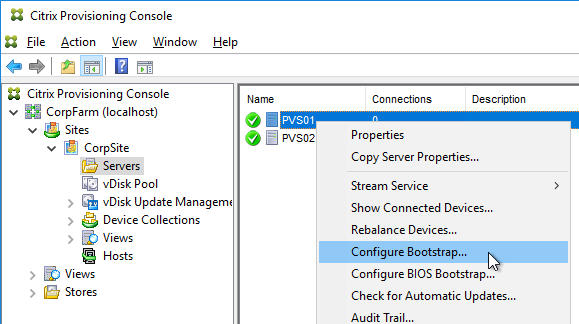

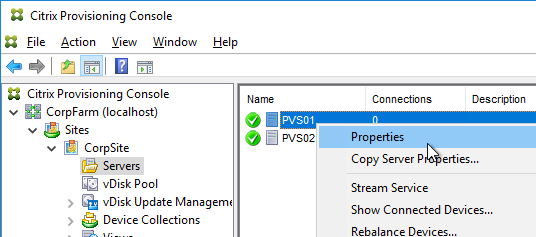

- Expand the Provisioning Site and click Servers.

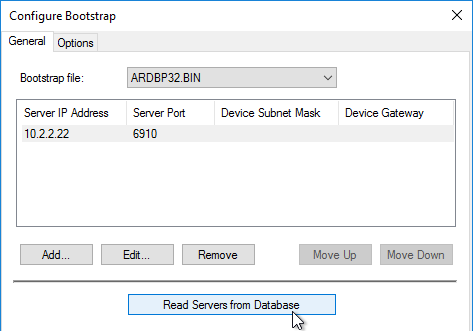

- For each Provisioning Server, right-click it, and click Configure Bootstrap.

- Click Read Servers from Database. This should cause both servers to appear in the list.

- From Carl Fallis at PVS HA at Citrix Discussions: when you stop the stream service through the console, the Provisioning server sends a message to the targets to reconnect to another server before the stream service shuts down. The target then uses the list of login servers (Bootstrap servers) and reconnects to another server. This failover is almost instantaneous and can’t really be detected. When a Provisioning server fails, the target detects the failure and reconnects. This is a slightly different mechanism, and the target may hang for a short time. For more information on the Provisioning server failure case, see https://www.citrix.com/blogs/2014/10/16/provisioning-services-failover-myth-busted
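The graceful failover case above can be modeled in a few lines. This is a simplified sketch under stated assumptions, not how the PVS target software is implemented: `LoginServer`, `next_login_server`, and `graceful_stop` are hypothetical names, the IP addresses are made up, and real targets may not walk the bootstrap list strictly in order. It only illustrates the idea that a stopping server hands the target off to another entry in the login server list.

```python
from dataclasses import dataclass


@dataclass
class LoginServer:
    """Hypothetical stand-in for one entry in the target's bootstrap list."""
    ip: str
    streaming: bool = True  # False once the stream service stops or the server fails


def next_login_server(bootstrap, current=None):
    """Return the first server in bootstrap order that is still streaming,
    skipping the server the target is currently connected to."""
    for server in bootstrap:
        if server is not current and server.streaming:
            return server
    raise RuntimeError("No Provisioning server in the bootstrap list is available")


def graceful_stop(bootstrap, current):
    """Model stopping the stream service from the console: the server stops
    streaming and the target reconnects to the next available login server."""
    current.streaming = False
    return next_login_server(bootstrap, current)


servers = [LoginServer("10.0.0.11"), LoginServer("10.0.0.12")]
new_server = graceful_stop(servers, servers[0])
print(new_server.ip)  # 10.0.0.12
```

If every server in the list has stopped, `next_login_server` raises, which is the model's equivalent of the target having nowhere to go.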

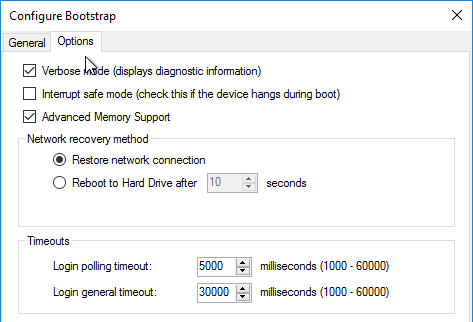

- On the Options tab, check the box next to Verbose mode.

- Right-click the server, and click Properties.

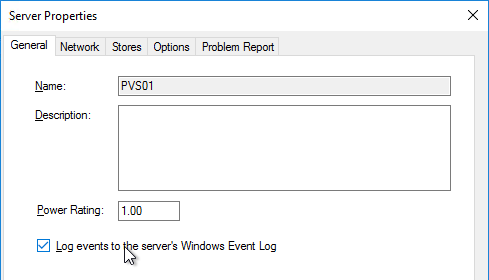

- On the General tab, check the box next to Log events to the server’s Windows Event Log.

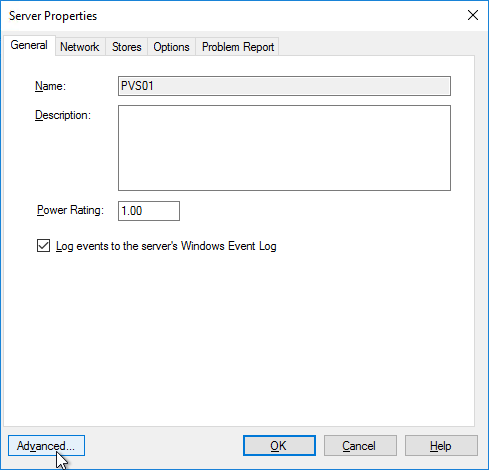

- Click Advanced.

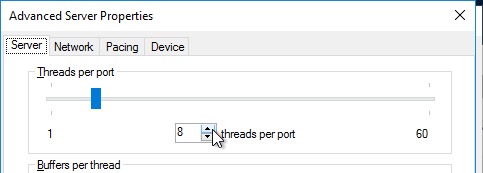

- Citrix Blog Post From Legacy to Leading Edge: The New Citrix Provisioning Guidelines says Avoid Modifying the Threads Per Port and Streaming Ports. The old guidance was that the number of threads per port should match the number of vCPUs assigned to the server.

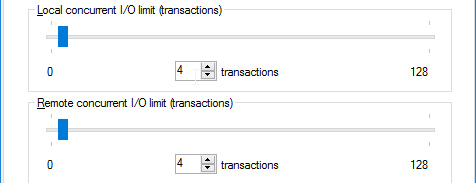

- On the same tab are concurrent I/O limits. Note that these throttle connections to local (drive letter) or remote (UNC path) storage. Setting them to 0 turns off the throttling. Only testing will determine the optimal number.

- Click OK to close Advanced Server Properties.
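To picture what the concurrent I/O limits do, here is a conceptual Python model in which a semaphore caps how many simultaneous reads reach storage, and a limit of 0 disables throttling, as described above. It is an assumption-laden sketch, not Citrix Provisioning’s internal implementation; `make_io_gate`, `peak_concurrency`, and the 10 ms simulated read are hypothetical.

```python
import threading
import time
from contextlib import nullcontext


def make_io_gate(limit):
    """Return a context manager that caps concurrent I/O at `limit` (0 = no throttling)."""
    if limit < 0:
        raise ValueError("limit must be >= 0")
    return nullcontext() if limit == 0 else threading.BoundedSemaphore(limit)


def peak_concurrency(limit, n_reads=20, read_seconds=0.01):
    """Run n_reads simulated reads through the gate and return the peak overlap seen."""
    gate = make_io_gate(limit)
    lock = threading.Lock()
    state = {"active": 0, "peak": 0}

    def read_block():
        with gate:
            with lock:
                state["active"] += 1
                state["peak"] = max(state["peak"], state["active"])
            time.sleep(read_seconds)  # stand-in for a local or UNC storage read
            with lock:
                state["active"] -= 1

    threads = [threading.Thread(target=read_block) for _ in range(n_reads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["peak"]


print(peak_concurrency(3))  # never more than 3
print(peak_concurrency(0))  # unthrottled
```

In this model a low limit protects slow storage from a burst of reads but queues the rest, which is why only testing against your own storage can show whether throttling helps.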

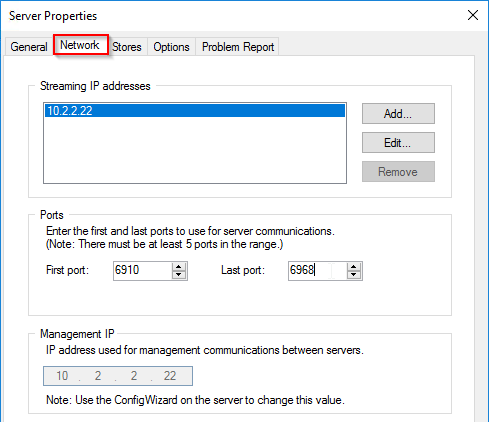

- The Network tab contains the streaming port range. Citrix Blog Post From Legacy to Leading Edge: The New Citrix Provisioning Guidelines says Avoid Modifying the Threads Per Port and Streaming Ports. The old guidance was to change the Last port to 6968.

- Note: port 6969 is used by the Provisioning two-stage boot (Boot ISO) component.

- You can set the First port to 7000 to avoid port 6969 and get more ports.

- Citrix Provisioning 1811 and newer open Windows Firewall ports during installation, but Citrix Provisioning Console will not change the Windows Firewall rules based on what you configure here. You’ll need to adjust the Windows Firewall rules manually.

- Click OK when done.
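If you do follow the old guidance, a quick check of the planned range helps. The sketch below assumes the default First port of 6890 and an example value of 8 threads per port (both assumptions; check your own console). It confirms the range avoids port 6969, computes ports × threads per port (a commonly cited rule of thumb for how many target requests can be serviced concurrently, not a guarantee), and builds a `netsh advfirewall` command for the manual Windows Firewall change. The rule name is hypothetical; the rules created by the installer may be named differently.

```python
BOOT_ISO_PORT = 6969  # used by the two-stage boot (Boot ISO) component


def check_stream_ports(first_port, last_port, threads_per_port):
    """Validate a streaming port range and return (port_count, ports x threads)."""
    if not 1 <= first_port <= last_port <= 65535:
        raise ValueError("expected 1 <= first_port <= last_port <= 65535")
    if first_port <= BOOT_ISO_PORT <= last_port:
        raise ValueError(f"port range {first_port}-{last_port} overlaps {BOOT_ISO_PORT}")
    port_count = last_port - first_port + 1
    return port_count, port_count * threads_per_port


def firewall_rule(first_port, last_port, name="Citrix Provisioning streaming"):
    """Build a netsh command that allows the streaming range inbound over UDP."""
    return (
        f'netsh advfirewall firewall add rule name="{name}" '
        f"dir=in action=allow protocol=UDP localport={first_port}-{last_port}"
    )


# Old guidance, assuming the default First port of 6890: Last port = 6968.
print(check_stream_ports(6890, 6968, 8))  # (79, 632)
print(firewall_rule(6890, 6968))
```

Starting at 7000 instead, as suggested above, gives the same number of ports without touching 6969, for example `check_stream_ports(7000, 7078, 8)`.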

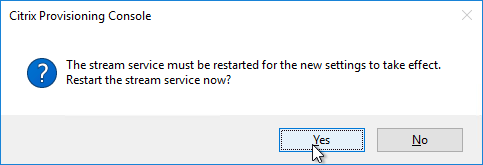

- Click Yes if prompted to restart the stream service.

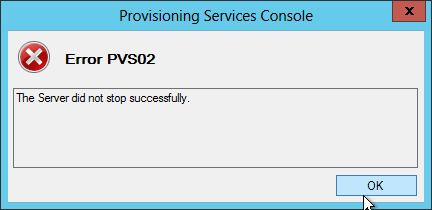

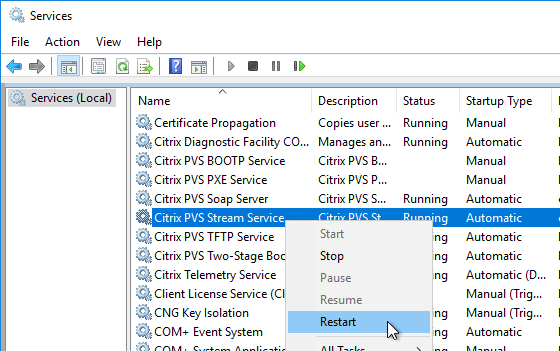

- If you get an error message about the stream service, then you’ll need to restart it manually.

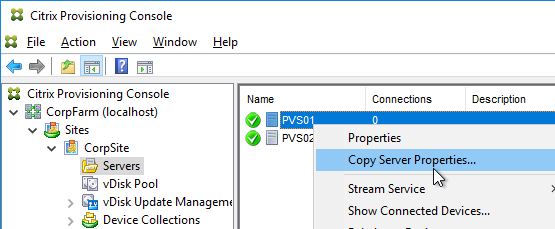

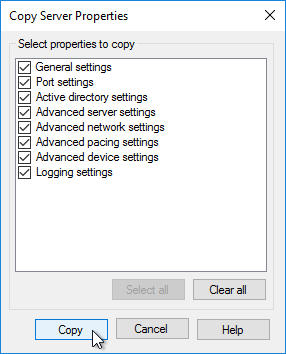

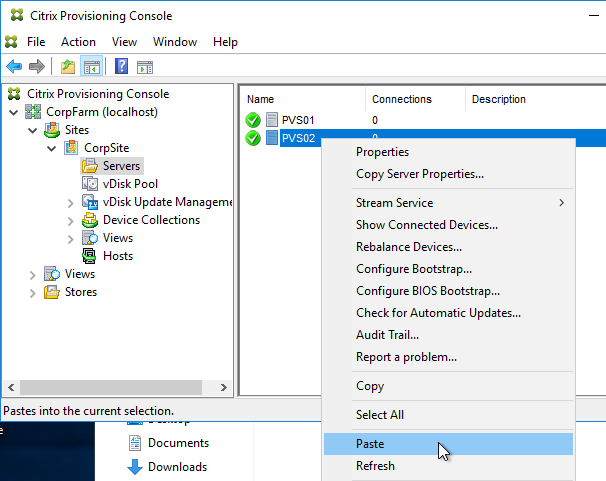

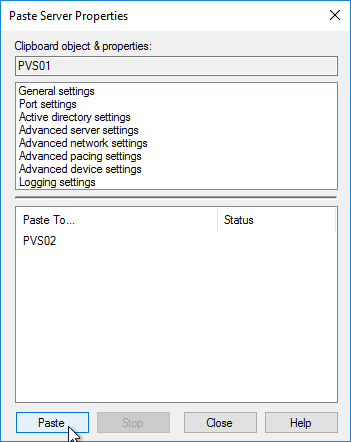

- Repeat for the other servers. You can copy the Server Properties from the first server, and paste them to additional servers.

Create vDisk Stores

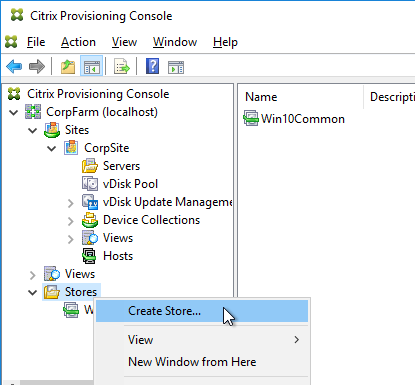

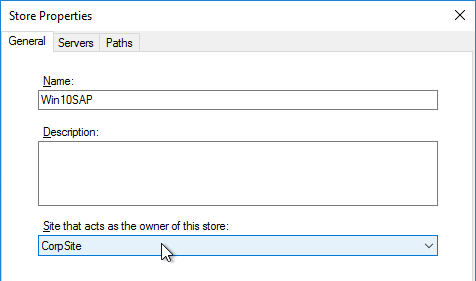

To create additional vDisk stores (one per vDisk / Delivery Group / Image), do the following:

- On the Provisioning servers, using Explorer, go to the local disk containing the vDisk folders and create a new folder. The folder name usually matches the vDisk name. Do this on both Provisioning servers.

- In the Provisioning Console, right-click Stores, and click Create Store.

- Enter the name for the vDisk store, and select an existing site.

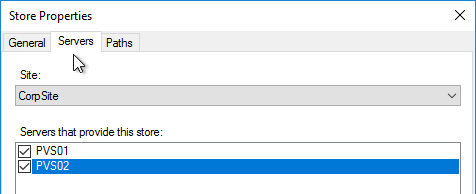

- Switch to the Servers tab. Check the boxes next to the Provisioning Servers.

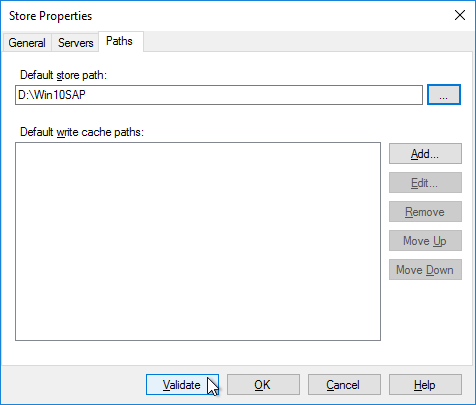

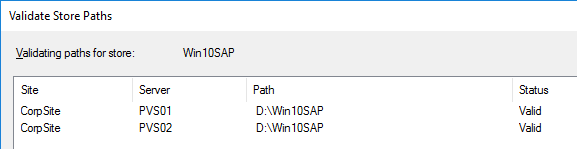

- On the Paths tab, enter the path for the Delivery Group’s vDisk files. Shared SMB paths are supported as described at Citrix Blog Post PVS Internals #4: vDisk Stores and SMB3.

- Click Validate.

- Click Close and then click OK.

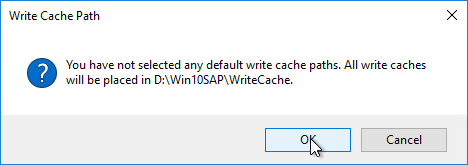

- Click Yes when asked for the location of write caches.
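Creating the same store folder on every Provisioning server is easy to forget on the second server. A minimal Python sketch, assuming the store folders live under `D:\vDisks` and are reachable through the `d$` admin share; the server names `pvs01`/`pvs02`, the vDisk name, and the helper `create_store_folders` are hypothetical.

```python
from pathlib import Path


def create_store_folders(store_roots, vdisk_name):
    """Create <root>/<vdisk_name> under every store root; safe to re-run."""
    created = []
    for root in store_roots:
        folder = Path(root) / vdisk_name
        folder.mkdir(parents=True, exist_ok=True)  # no error if it already exists
        created.append(folder)
    return created


# Hypothetical roots: the same local D:\vDisks folder on two servers via admin shares.
# create_store_folders([r"\\pvs01\d$\vDisks", r"\\pvs02\d$\vDisks"], "Win2022-Sales")
```

Because `exist_ok=True` is set, running it again after adding a third server only creates the missing folder.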

Create Device Collections

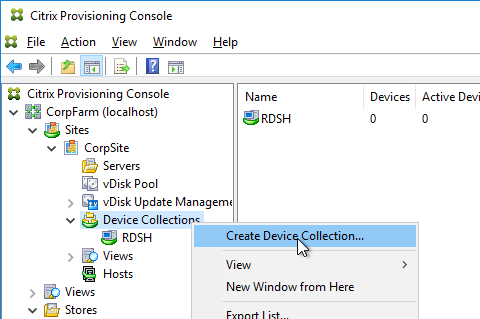

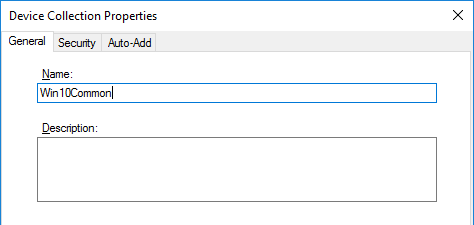

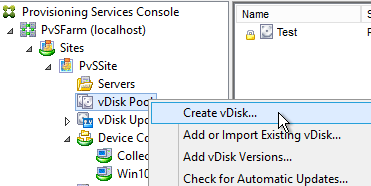

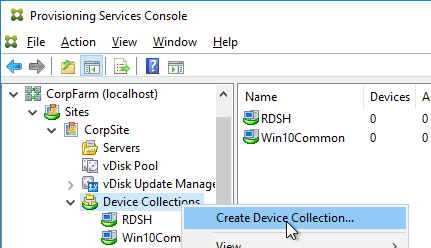

- Expand the site, right-click Device Collections, and click Create Device Collection.

- Name the collection in some fashion related to the name of the Delivery Group, and click OK.

If you are migrating from one Provisioning farm to another, see Kyle Wise’s How To Migrate PVS Target Devices.

Prevent “No vDisk Found” PXE Message

If PXE is enabled on your Provisioning servers, and if you PXE boot a machine that is not added as a device in the Provisioning console, then the machine will pause booting with a “No vDisk Found” message at the BIOS boot screen. Do the following to prevent this.

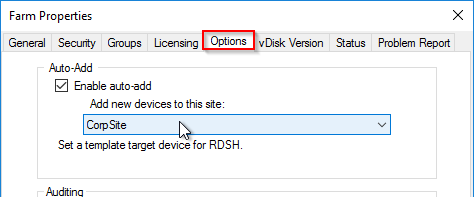

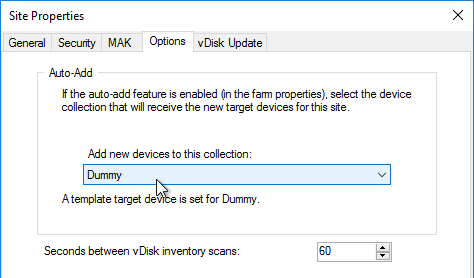

- Enable the Auto-Add feature in the farm Properties on the Options tab.

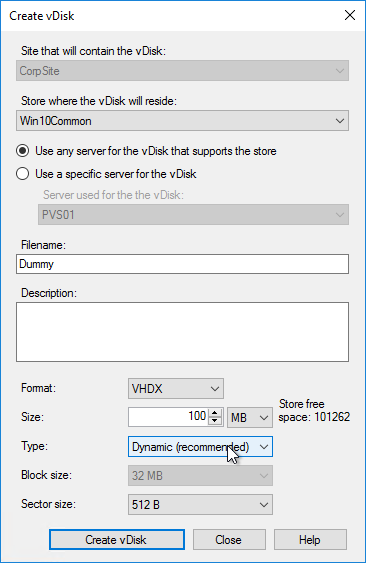

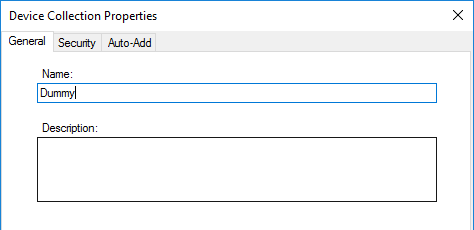

- Create a small dummy vDisk (e.g. 100 MB).

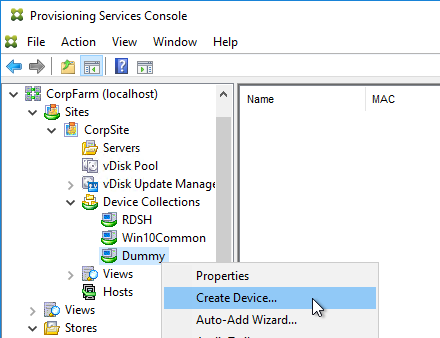

- Create a dummy Device Collection.

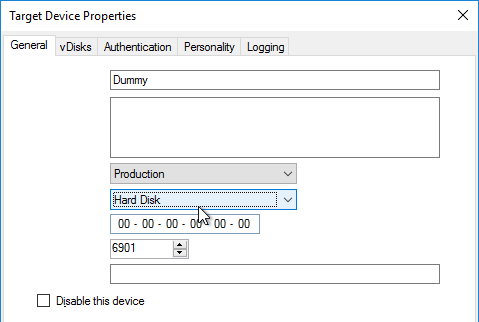

- Create a dummy device.

- Set it to boot from Hard Disk.

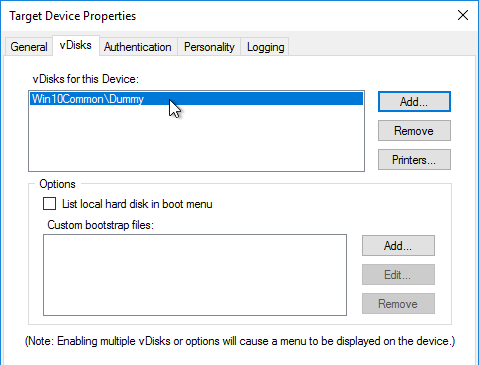

- Assign the dummy vDisk and click OK.

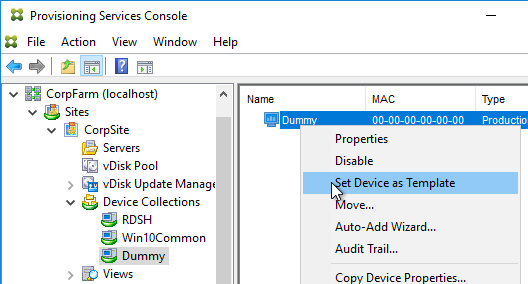

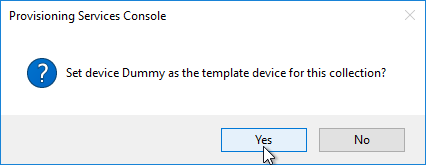

- Set the dummy device as the Template.

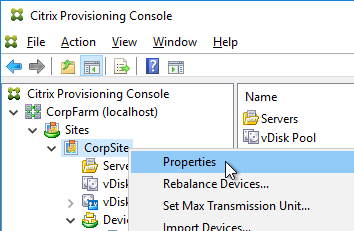

- Right-click the site, and click Properties.

- On the Options tab, point the Auto-Add feature to Dummy collection, and click OK.
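The dummy-device approach can be summarized as a small decision model. This is a conceptual sketch, not Citrix code: the `Device` fields and the `pxe_boot` function are hypothetical, and it assumes that an auto-added device takes its settings (collection, vDisk, boot-from) from the Template device, which is what the Auto-Add steps above rely on.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Device:
    mac: str
    collection: str
    vdisk: str
    boot_from: str  # "vDisk" or "Hard Disk"


def pxe_boot(mac, devices, auto_add, template=None):
    """Return what a PXE-booting machine does; may auto-add it from the template."""
    device = devices.get(mac)
    if device is None:
        if not auto_add or template is None:
            return "No vDisk Found"  # boot pauses at the BIOS screen
        device = replace(template, mac=mac)  # copy of the Template device's settings
        devices[mac] = device
    return f"boot from {device.boot_from}"


template = Device("00-00-00-00-00-00", "Dummy", "Dummy-vDisk", "Hard Disk")
devices = {}
print(pxe_boot("00-15-5D-01-02-03", devices, auto_add=False, template=template))  # No vDisk Found
print(pxe_boot("00-15-5D-01-02-03", devices, auto_add=True, template=template))   # boot from Hard Disk
```

Once the unknown machine lands in the Dummy collection with Hard Disk as its boot device, it no longer stops at the PXE message on later boots.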

Hello,

We have a Citrix infrastructure with 2 DDCs, 2 StoreFronts, and 2 PVS servers.

After a DNS issue on the client side, about which we have no details, half of the VDIs in the Delivery Groups are now “unregistered”.

We tried to reboot them from vCenter, but the issue persists.

We noticed that after a reboot they don’t have IPs assigned. Also, when connecting to the PVS servers to check for events, we see several instances of Event 20322 from DHCP-Server:

“PTR record registration for IPV4 address {“IP”} and FQDN {“hostname”} failed with error 9017 DNS bad-key”

All the unregistered machines have this entry in the Event Viewer on the PVS servers.

Do you have an idea about what the issue could be?

Thank you very much.

Do the computers have permissions to modify their DNS records? If you deleted and recreated the computer accounts in AD, then permissions won’t be valid.

What is your DHCP vendor?

Is anybody familiar with the MTU configuration in the PVS server Properties? We noticed that in the PVS Console, under PVS Server Properties -> Network tab -> Advanced -> Network tab (again), the Ethernet MTU is configured as 1506. That seems odd; you would think you would want 1500 (or 1472 per this discussion: https://discussions.citrix.com/topic/412772-pvs-determine-correct-mtu-size/ ).
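The 1472 figure most likely comes from the usual test of sending non-fragmenting pings (for example `ping -f -l 1472 <server>` on Windows), where the ICMP payload has to leave room for the IPv4 and ICMP headers inside a 1500-byte Ethernet MTU. The arithmetic, assuming a 20-byte IPv4 header with no options:

```latex
\underbrace{1500}_{\text{Ethernet MTU}}
  - \underbrace{20}_{\text{IPv4 header}}
  - \underbrace{8}_{\text{ICMP header}}
  = \underbrace{1472}_{\text{largest unfragmented ping payload}}
```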

Any feedback would be appreciated.

I have a provisioning server that is giving me a “server is not reachable” in the replication tab. I’ve copied all pvp and vhdx (avhdx) files from the “working” provisioning server to the unreachable one. Additionally, when I create a new maintenance image, I receive a “no servers available” when I try to boot to the image. Both provisioning servers have active connections at the moment. I updated the reg key for private mode fix and I still cannot boot to a new maintenance image. Any ideas?

Usually services need to be restarted.

When booting from the BDM ISO file, will the server update its list of available Streaming Servers after boot?

We have 4 PVS streaming servers and 2 sets of target servers. We configured boot ISOs with 2 of the 4 PVS streaming servers for one set of PVS target VMs, and the remaining 2 PVS streaming servers in a different boot ISO for the other set of PVS target VMs.

My question is: after a set of target VMs boots from the ISO with only 2 of the 4 PVS streaming servers defined, will each target VM only communicate with the 2 servers listed in that boot ISO for its entire runtime, or will it see, after Windows boots, that there are 2 other PVS servers it can fail over to if the 2 listed in the boot ISO go away?

During initial boot, when logging in to PVS, it will only log on to the servers in the Boot ISO. After login, I think it can be streamed from any of the PVS servers.

Hi Carl,

Thanks for the excellent article. We have one test farm. We upgraded the PVS farm from 1912 CU3 to 1912 CU4.

Both PVS servers upgraded successfully, but after the upgrade the PVS02 console does not open. We get an error about the Authorization group. We checked the event logs but found nothing.

You might have to do a CDF trace and then look at the CDF log to determine the problem.

Are the PVS Services running? Here’s a recent thread – https://discussions.citrix.com/topic/415259-pvs-services-crash-after-updates/page/2/#comment-2088618

Hello All,

Our current PVS environment is LTSR 1912 and we need to upgrade to LTSR 1912 CU4. Can anyone please provide the steps to upgrade to PVS 1912 CU4?

I have some info at https://www.carlstalhood.com/provisioning-services-server-install/#upgrade

Dear Carl.

Thank you for the very nice article.

I hope you can help me troubleshoot an installation of the PVS Console on a jump/management server.

The console is installed on a separate server, and from there I cannot connect to the PVS server. The error is “An error occurred communicating with the Server”. My account has full permissions over PVS, and if I log in directly on the PVS server there are no issues. The communication ports are open from the management server to the PVS server; I ran procmon and saw that the TCP connection is made and data is sent and received. I also ran CDFControl to see if I could get any information there, but found nothing.

The console is also installed on another management server, and from there it works. The working management server was installed by someone who is no longer available to ask, so I am a bit stuck and not sure how to debug or troubleshoot this issue.

The PVS Console is exactly the same version as the PVS Server.

To my knowledge, only ports 54321, 54322, and 54323 are needed for the console. Ports 80 and 443 are also open for other reasons.

If possible, please give some advice on troubleshooting tools I can use to figure this issue out.

Thank you.

Cornel

What do you see being blocked in the firewall logs?

Thank you for reply.

The issue is fixed.

Nothing was blocked by the firewall itself; rather, the traffic was blocked by Windows local group policy on the server where I tried to run the PVS console. Maybe this information is of use to others who have the same issue, as I could not find it anywhere.

The problem was that local group policy was blocking NTLM traffic.

The issue was resolved by modifying the local group policy: Computer Configuration/Windows Settings/Security Settings/Local Policies/Security Options :

Changed the following settings:

Network security: Restrict NTLM: Incoming NTLM traffic – Allow all

Network security: Restrict NTLM: Outgoing NTLM traffic to remote servers – Allow all

In my case, in the procmon.exe logs, I noticed the lsass.exe process right after the TCP connection was established from the PVS console to the server.

After changing the above GPO settings, I monitored again with procmon.exe. The lsass.exe process no longer appeared after the TCP connection was established, and the console now works.

I hope I expressed myself correctly and others might find this information useful.

Also, I am not sure if this is the perfect solution, but it works for me.

Hi Carl, I have the error : VHD Library: Failed to read or write the entire VHD Header. Error number 0xE006000B.

The vdisk files are in the local drive (pvs drive D:).

Do you have any idea?

Any antivirus or other security tool blocking it?

Does the PVS Service Account have write permissions to the Store path?

Yes, the service account has write permissions. I’ll check whether a security tool is blocking it.

Hi Carl,

This is great for setting up a device collection, but how do you add more devices? I currently have 6 in my collection and want to expand it to 14. I have the template that was used to create the initial devices, but I no longer have access to the contractor who helped set it up.

You should be able to run the Virtual Desktops Setup Wizard again. https://www.carlstalhood.com/pvs-create-devices/#wizard

I have an issue with the configuration of the first PVS Server. I am on PVS 1912 LTSR CU1.

Everything is done, but on the Servers tab the server is down and not active. I have restarted all services, but nothing changed.

What can I do to resolve the issue?

Regards,

Hi Gotti,

I had the same issue. You must install the latest version of “Microsoft® SQL Server® 2012 Native Client” on the PVS server.

https://support.citrix.com/article/CTX226526

Best regards

Bjoern

Have you fixed this? I am facing the same issue. Any help would be appreciated.

Hi Carl,

I am unable to boot Target Devices (Server 2012 R2). They are stuck at the Windows logo or at Windows Boot Manager with Status: 0xc000000e. Info: The boot selection failed because a required device is inaccessible.

This is a new installation of Citrix VA&VD 7.15 LTSR CU3, with all components on LTSR CU3.

Target Devices use VMXNET3, and all components are in the same subnet.

Interestingly, the PVS console is unable to open on the primary PVS server after the Oct 2019 patches were installed yesterday.

Could you please help me?

Did you remove the SATA controller from the Target Device?

Hello Carl,

We need to change the password of the user account configured in the virtual host connection properties.

Does this operation require scheduled downtime?

Regards

Nope. I think it’s only used by the Wizards, and if you use the PvS Console to perform power operations.

If you are updating PVS LTSR 7.15 from CU3 to CU4, please note that the installer doesn’t appear to update the PowerShell snap-in components. You will have to run the MSIs individually (what I did) or remove CU3 before applying CU4 (per Citrix Support).

Hi Carl,

I have a scenario where I would like to schedule the promotion of a new vDisk version to Production mode so it applies automatically to all VMs in a delivery group. I achieved this easily in the PVS console by scheduling the promotion of the disk from Test mode to Production mode, but not all VMs boot to the newly promoted vDisk version, as described below:

1) I use power management to shut down all VMs and connected sessions at 9:30 PM, but due to the buffer rate, 10% of VMs stay on.

2) The image gets auto-promoted to Production mode at 1 AM.

3) All VMs start booting at 3 AM, increasing in 20% increments until 100% before 8 AM.

4) The problem is that the 10% of VMs kept on as the off-peak buffer are still not rebooted to get the new Production image the next morning.

I guess I have two options to achieve this, but I am not sure which is best:

1) Remove the 10% buffer rate of the delivery group for off-peak hours?

or

2) Create a scheduled daily reboot of all VMs in the delivery group? If so, will all VMs in the delivery group be restarted, or only the 10% buffered ones? I am concerned about whether the VMs that were shut down by power management will be turned on and rebooted.

What would be the best plan of action if you wanted to promote a vDisk version automatically with all VMs booted to the new vDisk version? Are there any limitations to this process?

Thanks

Hi

What is the Citrix guidance on creating separate stores? Is there a limit on how many stores you can create, and is there any impact of having just one store?

Thanks

Edgar

I’m not aware of any store limitations. How many are you considering making?

I usually create a separate store for each vDisk, especially if versioning. Otherwise too many files in one folder.

While creating a vDisk, after restarting from the network, I get the following error message:

A Provisioning Services vdisk was not found.

This usually means that the MAC address of the machine you are booting is not in the Provisioning Services Console as a Target Device. Or a vDisk is not assigned to the Target Device in the PVS Console.

I can see one machine has appeared in the Device Collection and is assigned to the newly created vDisk, but it shows as Down.

Hello,

we are running a XenDesktop 7.12 farm with Citrix Provisioning 7.12 and Hyper-V 2012 R2.

All provisioned terminal servers are Gen1 VMs.

Is there a best practice guide for configuring Citrix Provisioning Services in a mixed farm with Gen1 and Gen2 VMs?

Kind regards

Inga

Hi Carl, I am receiving an error in PVS when trying to use PVS Accelerator while creating new target devices. It’s set up in XenServer using RAM only, and I receive the error with both the Streamed VM Setup Wizard and the XenDesktop Setup Wizard.

“An unexpected MAPI error occurred (the address specified is already in use by an existing PVS_server object)”

I’m using Xenserver 7.1 and PVS 7.14

thanks

Hi Carl,

This is rather a weird question. Is there a way to force PVS to use more RAM? Our consultants specced our 2 PVS VMs with 200 GB of RAM in order to stream about 15 images, assuming PVS would use around 10-15 GB of RAM per image. Right now, with all 15 images (streaming 550 VDI VMs), we are only using 15 GB total. I am not sure if newer versions are simply more optimized to use less RAM or if there is anything we can do to allow PVS to consume more RAM.

Thanks

Andrew

Are the vDisks stored locally? Or are they on a remote share?

I think there’s a perfmon counter indicating cache hits. If the percentage is high, then I guess it doesn’t need to cache anything else in RAM.

The attached VMDKs are stored on our SAN, but Windows just sees them as directly attached disks.

Hi Carl

I have PVS servers configured. PVS1 seems to be fine. Unfortunately, while installing and configuring PVS2, two IP addresses were erroneously configured in SystemNetworkConfiguration. This was only discovered after PVS configuration, and the unneeded IP was removed.

However, whenever I create a Boot ISO on PVS2, the removed IP still shows up when I use the ISO file. It seems the information is still stored somewhere in the PVS2 configuration. I have checked the registry but found nothing.

Do you have any idea where in the PVS configuration or system files I should look to remove the erroneous IP?

Thanks

In PvS Console, if you right-click the server and click Configure Bootstrap, is it in the list?

No, I do not have the erroneous IP in the list. Only the correct IPs for the two PVS are listed.

Hi Carl

Why would anyone NOT enable offline DB support? (Why isn’t it enabled by default?)

Increase the threads per port. The number of threads per port should match the number of cores in the server (including hyperthreading).

Do you mean the cores of the provisioning server Virtual Machine or really the physical vSphere host ?

Some PvS servers are physical. But yes, I meant the VM.

Carl, when going to a server, and clicking Show Connected Devices, do you know of a way to export that data? I was thinking with MCLI-GET, but I don’t see the option.

Maybe MCLI Get DeviceStatus? There should be a serverIpConnection column. You can probably filter the command to only retrieve devices for a specific server.
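If the `MCLI Get DeviceStatus` output has been saved or converted to CSV, filtering by `serverIpConnection` and exporting the result takes a few lines of Python. This sketch assumes a CSV with `deviceName` and `serverIpConnection` columns; the `deviceName` column name, the sample data, and both helper functions are hypothetical, so adjust them to whatever your export actually contains.

```python
import csv
import io


def devices_on_server(csv_text, server_ip):
    """Return device names whose serverIpConnection matches server_ip."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["deviceName"] for row in reader if row.get("serverIpConnection") == server_ip]


def write_device_list(names, path):
    """Write the filtered names to a one-column CSV file."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["deviceName"])
        writer.writerows([name] for name in names)


sample = """deviceName,serverIpConnection
VDI01,10.0.0.11
VDI02,10.0.0.12
VDI03,10.0.0.11
"""
print(devices_on_server(sample, "10.0.0.11"))  # ['VDI01', 'VDI03']
```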

Carl,

Can you find the XenDesktop Controller address in the PVS console? If not, where is that information displayed?

PvS only talks to Controllers when you run the XenDesktop Setup Wizard. Or are you asking about the Controllers that the VDAs are registering with? If so, check the ListOfDDCs registry key on the VDA.

If we were going to run the XenDesktop Setup Wizard in the PvS console and didn’t have the controller hostname or IP, where should we look?

Check HKEY_CURRENT_USER\Software\Citrix\ProvisioningServices\VdiWizard

Hi Carl

Thanks for the excellent article. I see that you are using a local folder for the PVS vDisk. I have a question regarding that.

I am designing a PVS deployment. I have 3 PVS servers and will be hosting around 5 vDisks for now (standard mode). I have not been told whether CIFS is available.

Where can I place these vDisks? I can think of two options and would like to know which one is better in your opinion.

Option 1

Place vDisks locally on each PVS server. Configure DFS-R on each PVS server and let it synchronise the vDisks across PVS servers. This avoids a single point of failure but requires extra space to store the vDisks on each server.

Option 2

Place vDisks on a CIFS SMB 3.0 share. All updates get stored in the share, to which all PVS servers are connected. This could be a single point of failure but requires less space.

I also read that by default PVS streams from disk for the first target, caches vDisk contents locally in server RAM, and serves subsequent streams from PVS server RAM. Does this happen for both options?

Thanks

Matheen

1. This is the traditional configuration because it provides maximum availability, maximum performance, and less network activity. But yes, extra disk space.

2. Where I’ve seen SMB shares the performance seems to be lower than local storage. Also, older versions of PvS did not cache SMB vDisks but newer versions should now cache them.